Installation

This chapter introduces the steps to install PG-Strom.

Checklist

- Server Hardware

- PG-Strom runs on generic x86_64 hardware that can run a Linux distribution supported by the CUDA Toolkit. There are no special requirements for the CPU, storage, or network devices.

- 002: HW Validation List may help you choose the hardware.

- GPU Direct SQL Execution requires NVME-SSD devices or a fast network card with RoCE support, installed under the same PCIe Root Complex as the GPU.

- GPU Device

- PG-Strom requires at least one GPU device that is supported by the CUDA Toolkit and has compute capability 6.0 (Pascal generation) or later.

- See 002: HW Validation List - List of supported GPU models when selecting a GPU.

- Operating System

- PG-Strom requires a Linux distribution for the x86_64 architecture that is supported by the CUDA Toolkit. We recommend Red Hat Enterprise Linux or Rocky Linux, version 9.x or 8.x.

- GPU Direct SQL (with the cuFile driver) needs the nvidia-fs driver distributed with the CUDA Toolkit, and the Mellanox OFED (OpenFabrics Enterprise Distribution) driver.

- PostgreSQL

- PG-Strom v5.0 requires PostgreSQL v15 or later.

- Some PostgreSQL APIs that PG-Strom uses internally are not available in earlier versions.

- CUDA Toolkit

- PG-Strom requires CUDA Toolkit version 12.2 Update 1 or later.

- Some CUDA Driver APIs that PG-Strom uses internally are not available in earlier versions.
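The PostgreSQL and CUDA version thresholds in this checklist can be checked before going further. The bash sketch below compares dotted version strings with GNU `sort -V`. The helpers `version_ge` and `check_prereqs` are hypothetical, and treating "12.2 Update 1" as the version string 12.2.1 is an assumption of this sketch.

```shell
# version_ge A B: succeed (exit 0) when version A >= version B.
# Relies on GNU coreutils "sort -V" (version sort).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# check_prereqs PG_VERSION CUDA_VERSION: report both against the checklist.
# Assumption: CUDA 12.2 Update 1 corresponds to version string 12.2.1.
check_prereqs() {
    local rc=0
    if version_ge "$1" 15; then
        echo "PostgreSQL $1: OK"
    else
        echo "PostgreSQL $1: too old (need v15 or later)"; rc=1
    fi
    if version_ge "$2" 12.2.1; then
        echo "CUDA $2: OK"
    else
        echo "CUDA $2: too old (need 12.2 Update 1 or later)"; rc=1
    fi
    return $rc
}
```

For example, `check_prereqs "$(pg_config --version | awk '{print $2}')" 12.5.0` checks the PostgreSQL found on PATH against CUDA 12.5.0; pass the CUDA version actually installed on your system.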

Steps to Install

The overall installation steps are as follows:

- Hardware Configuration

- OS Installation

- MOFED Driver installation

- CUDA Toolkit installation

- HeteroDB Extra Module installation

- PostgreSQL installation

- PG-Strom installation

- PostgreSQL Extensions installation

    - PostGIS

    - contrib/cube

OS Installation

Choose a Linux distribution supported by the CUDA Toolkit, then install the system according to that distribution's installation process. NVIDIA DEVELOPER ZONE lists the Linux distributions supported by the CUDA Toolkit.

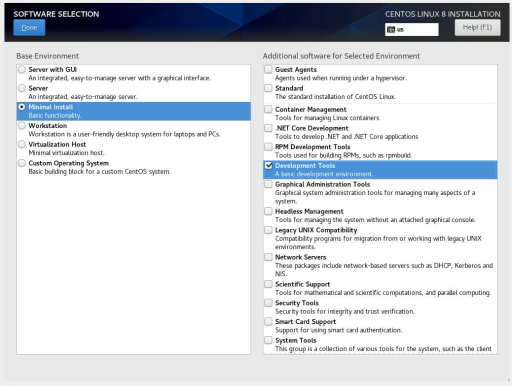

For the Red Hat Enterprise Linux 8.x series (including the Rocky Linux 8.x series), choose "Minimal installation" as the base environment, and also check the "Development Tools" add-on in the software selection.

After installing the OS on the server, configure the package repositories so that the third-party packages can be installed.

If you did not check "Development Tools" in the installer, you can install it after the OS installation with the command below.

# dnf groupinstall 'Development Tools'

Tip

If the GPU devices installed on the server are very new, the system may crash during boot.

In this case, you may avoid the problem by adding nouveau.modeset=0 to the kernel boot options to disable the inbox graphics driver.

Disable the nouveau driver

When the nouveau driver, an open source driver for NVIDIA GPUs, is loaded, it prevents the nvidia driver from loading. In this case, disable the nouveau driver and reboot the operating system.

To disable the nouveau driver, put the following configuration in /etc/modprobe.d/disable-nouveau.conf, and run the dracut command to apply it to the boot image of the Linux kernel.

Then, restart the system.

# cat > /etc/modprobe.d/disable-nouveau.conf <<EOF

blacklist nouveau

options nouveau modeset=0

EOF

# dracut -f

# shutdown -r now
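After the reboot, you can confirm that nouveau is no longer loaded. This minimal bash sketch reads `lsmod` output and looks for nouveau in the module-name column; the helper name `nouveau_loaded` is hypothetical.

```shell
# nouveau_loaded: read `lsmod` output on stdin; succeed if a module named
# exactly "nouveau" appears in the first column.
nouveau_loaded() {
    awk '$1 == "nouveau" { found = 1 } END { exit !found }'
}

# Usage:
#   if lsmod | nouveau_loaded; then echo "nouveau is still loaded"; fi
```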

Disable IOMMU

GPU-Direct SQL uses the GPUDirect Storage (cuFile) API of CUDA.

Before using GPUDirect Storage, IOMMU must be disabled on the OS side.

Configure the kernel boot options as described in the NVIDIA GPUDirect Storage Installation and Troubleshooting Guide.

To disable IOMMU, add amd_iommu=off (for AMD CPUs) or intel_iommu=off (for Intel CPUs) to the kernel boot options.

Configuration on RHEL9

The command below adds the kernel boot option (this example is for AMD CPUs; specify intel_iommu=off instead on Intel CPUs).

# grubby --update-kernel=ALL --args="amd_iommu=off"

Configuration on RHEL8

Open /etc/default/grub with an editor and add the above option to the GRUB_CMDLINE_LINUX= line.

For example, the settings should look like this:

:

GRUB_CMDLINE_LINUX="rhgb quiet amd_iommu=off"

:

Run the following commands to apply the configuration to the kernel boot options and reboot.

-- for BIOS based system

# grub2-mkconfig -o /boot/grub2/grub.cfg

# shutdown -r now

-- for UEFI based system

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

# shutdown -r now
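Which option to add depends on the CPU vendor, and after the reboot the result is visible in /proc/cmdline. The bash sketch below assumes the vendor_id field of /proc/cpuinfo reports AuthenticAMD or GenuineIntel; the helpers `iommu_off_arg` and `has_kernel_arg` are hypothetical.

```shell
# iommu_off_arg VENDOR_ID: map the CPU vendor to the kernel option named above.
iommu_off_arg() {
    case "$1" in
        AuthenticAMD) echo "amd_iommu=off" ;;
        GenuineIntel) echo "intel_iommu=off" ;;
        *) return 1 ;;   # unknown vendor: decide manually
    esac
}

# has_kernel_arg ARG CMDLINE: succeed if ARG is one of the space-separated
# words of CMDLINE (e.g. the contents of /proc/cmdline).
has_kernel_arg() {
    case " $2 " in
        *" $1 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage on RHEL9 (as root):
#   vendor=$(awk -F': *' '/^vendor_id/ { print $2; exit }' /proc/cpuinfo)
#   arg=$(iommu_off_arg "$vendor") && grubby --update-kernel=ALL --args="$arg"
#   # after reboot:
#   has_kernel_arg "$arg" "$(cat /proc/cmdline)" && echo "$arg is active"
```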

Enable extra repositories

EPEL (Extra Packages for Enterprise Linux)

Several software modules required by PG-Strom are distributed as part of EPEL (Extra Packages for Enterprise Linux). You need to add the EPEL repository definition to the yum/dnf configuration to obtain them.

One of the packages obtained from the EPEL repository is DKMS (Dynamic Kernel Module Support), a framework that builds Linux kernel modules on demand for the running kernel; it is used for NVIDIA's GPU driver and related modules. Since kernel modules must be rebuilt whenever the Linux kernel is upgraded, we do not recommend operating the system without DKMS.

The epel-release package provides the EPEL repository definition. You can obtain it from the Fedora Project website.

-- For RHEL9

# dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-9.noarch.rpm

-- For RHEL8

# dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm

-- For Rocky8/Rocky9

# dnf install epel-release

Red Hat CodeReady Linux Builder

Installation of the MOFED (Mellanox OpenFabrics Enterprise Distribution) driver requires the Red Hat CodeReady Linux Builder repository, which is disabled in a default Red Hat Enterprise Linux installation. On Rocky Linux, the equivalent repository is called PowerTools (Rocky Linux 8) or CRB (Rocky Linux 9).

To enable this repository, run the command below:

-- For RHEL9

# subscription-manager repos --enable codeready-builder-for-rhel-9-x86_64-rpms

-- For Rocky9

# dnf config-manager --set-enabled crb

-- For RHEL8

# subscription-manager repos --enable codeready-builder-for-rhel-8-x86_64-rpms

-- For Rocky8

# dnf config-manager --set-enabled powertools

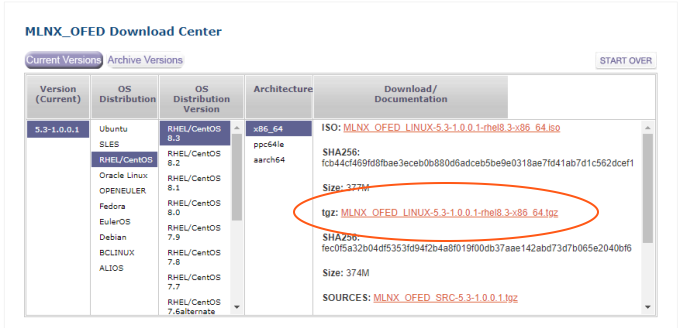
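The EPEL and CodeReady/PowerTools commands above differ only by distribution and major version. The bash sketch below reads an os-release file and prints, rather than runs, the matching commands from this section so you can review them first. The helper `repo_commands` is hypothetical, and it covers only the four cases listed above.

```shell
# repo_commands OS_RELEASE_FILE: print the EPEL and CodeReady/PowerTools
# commands from this section that match ID and VERSION_ID in the file.
repo_commands() {
    local id ver major
    id=$(. "$1"; echo "$ID")            # sourced in a subshell
    ver=$(. "$1"; echo "$VERSION_ID")
    major=${ver%%.*}                    # "9.4" -> "9"
    case "$id:$major" in
        rhel:9)
            echo "dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-9.noarch.rpm"
            echo "subscription-manager repos --enable codeready-builder-for-rhel-9-x86_64-rpms" ;;
        rhel:8)
            echo "dnf install https://dl.fedoraproject.org/pub/epel/epel-release-latest-8.noarch.rpm"
            echo "subscription-manager repos --enable codeready-builder-for-rhel-8-x86_64-rpms" ;;
        rocky:9)
            echo "dnf install epel-release"
            echo "dnf config-manager --set-enabled crb" ;;
        rocky:8)
            echo "dnf install epel-release"
            echo "dnf config-manager --set-enabled powertools" ;;
        *)
            echo "not covered by this section: ID=$id VERSION_ID=$ver" >&2
            return 1 ;;
    esac
}

# Usage: repo_commands /etc/os-release
```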

MOFED Driver Installation

You can download the latest MOFED driver from here.

This section shows an example of installing MOFED driver version 23.10 from its tgz archive.

The MOFED installer requires at least the createrepo and perl packages.

Install them, extract the tgz archive, then run the mlnxofedinstall script.

Do not forget the options that enable the GPUDirect Storage features, as in the example below.

# dnf install -y perl createrepo

# tar zxvf MLNX_OFED_LINUX-23.10-2.1.3.1-rhel9.3-x86_64.tgz

# cd MLNX_OFED_LINUX-23.10-2.1.3.1-rhel9.3-x86_64

# ./mlnxofedinstall --with-nvmf --with-nfsrdma --add-kernel-support

# dracut -f

During the build and installation of the MOFED drivers, the installer may require additional packages.

In this case, the error message lists the missing packages, as shown below; install them with the dnf command.

Error: One or more required packages for installing OFED-internal are missing.

Please install the missing packages using your Linux distribution Package Management tool.

Run:

yum install kernel-rpm-macros

Failed to build MLNX_OFED_LINUX for 5.14.0-362.8.1.el9_3.x86_64

Once the MOFED drivers are installed, they replace several inbox drivers, such as the nvme driver.

For example, the command below shows that /lib/modules/<KERNEL_VERSION>/extra/mlnx-nvme/host/nvme-rdma.ko, installed by MOFED, is used instead of the inbox nvme-rdma (/lib/modules/<KERNEL_VERSION>/kernel/drivers/nvme/host/nvme-rdma.ko.xz).

$ modinfo nvme-rdma

filename: /lib/modules/5.14.0-427.18.1.el9_4.x86_64/extra/mlnx-nvme/host/nvme-rdma.ko

license: GPL v2

rhelversion: 9.4

srcversion: 16C0049F26768D6EA12771B

depends: nvme-core,rdma_cm,ib_core,nvme-fabrics,mlx_compat

retpoline: Y

name: nvme_rdma

vermagic: 5.14.0-427.18.1.el9_4.x86_64 SMP preempt mod_unload modversions

parm: register_always:Use memory registration even for contiguous memory regions (bool)

Then, reboot the system to replace the kernel modules that are already loaded (such as nvme).

Do not forget to run dracut -f after the mlnxofedinstall script completes.
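To check which build of a module is installed, look at the filename: line of modinfo, as in the example above. This bash sketch is a minimal check; the helper name `modinfo_from_extra` is hypothetical, and testing for an /extra/ path is based only on the example output shown.

```shell
# modinfo_from_extra: read `modinfo MODULE` output on stdin; succeed if the
# filename: line points under an .../extra/ directory (where the MOFED
# nvme-rdma.ko appears in the example above) rather than the inbox path.
modinfo_from_extra() {
    awk '$1 == "filename:" { f = $2 }
         END { exit !(f ~ /\/extra\//) }'
}

# Usage:
#   modinfo nvme-rdma | modinfo_from_extra && echo "nvme-rdma comes from MOFED"
```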

Tips

Linux kernel upgrades and the MOFED driver

MOFED drivers do not use DKMS (Dynamic Kernel Module Support) on RHEL series distributions. Therefore, when the Linux kernel is upgraded, you need to repeat the above steps to reinstall a MOFED driver that is compatible with the new kernel.

The Linux kernel may also be upgraded along with other packages, for example when installing the CUDA Toolkit as described below; the same applies in that case.

heterodb-swdc Installation

PG-Strom and related packages are distributed from the HeteroDB Software Distribution Center (SWDC). You need to add the HeteroDB-SWDC repository definition to your system to obtain this software.

The heterodb-swdc package provides the repository definition of HeteroDB-SWDC.

Access the HeteroDB Software Distribution Center with a web browser, download heterodb-swdc-1.3-1.el9.noarch.rpm at the top of the file list, then install this package. (Use heterodb-swdc-1.3-1.el8.noarch.rpm for RHEL8.)

Once the heterodb-swdc package is installed, the yum/dnf configuration is updated to obtain software from the HeteroDB-SWDC repository.

You can also install the heterodb-swdc package directly from its URL, as in the RHEL8 example below; adjust the file name to the version and distribution listed on the SWDC.

# dnf install https://heterodb.github.io/swdc/yum/rhel8-noarch/heterodb-swdc-1.2-1.el8.noarch.rpm

CUDA Toolkit Installation

This section describes the installation of the CUDA Toolkit. If you have already installed the latest CUDA Toolkit, check whether your installation matches the configuration described in this section.

NVIDIA offers two approaches to installing the CUDA Toolkit: a self-extracting archive (runfile) and RPM packages. We recommend the RPM installation for PG-Strom setup.

You can download the installation package for the CUDA Toolkit from NVIDIA DEVELOPER ZONE. Choose your OS, architecture, distribution and version, then choose the "rpm(network)" edition.

Once you choose the "rpm(network)" option, the page shows step-by-step shell commands to register the CUDA repository and install the packages. Run the installation according to this guidance.

# dnf config-manager --add-repo https://developer.download.nvidia.com/compute/cuda/repos/rhel9/x86_64/cuda-rhel9.repo

# dnf clean all

# dnf install cuda-toolkit-12-5

After the CUDA Toolkit installation, the page introduces two alternative commands to install the nvidia driver.

Please use the open source version of nvidia-driver here. Only the open source version supports the GPUDirect Storage feature, and PG-Strom's GPU-Direct SQL utilizes this feature.

Tips

Using Volta or older GPUs

The open source edition of the nvidia driver does not support Volta generation GPUs or older. Therefore, if you want to use PG-Strom with Volta or Pascal generation GPUs, you need to use CUDA 12.2 Update 1, whose proprietary driver supports GPUDirect Storage. The CUDA 12.2 Update 1 package can be obtained here.

Next, install the open source nvidia driver and the nvidia-gds driver module for GPUDirect Storage (GDS).

Append the same version suffix as the CUDA Toolkit to the nvidia-gds package name (for example, nvidia-gds-12-5 for cuda-toolkit-12-5).

# dnf module install nvidia-driver:open-dkms

# dnf install nvidia-gds-12-5
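Because the nvidia-gds suffix must follow the CUDA Toolkit version, it can be derived instead of typed by hand. A small bash sketch, assuming the "major-minor" suffix convention seen in cuda-toolkit-12-5 and nvidia-gds-12-5; the helper `cuda_pkg_suffix` is hypothetical.

```shell
# cuda_pkg_suffix VERSION: turn "12.5" or "12.5.0" into the "12-5" suffix
# used by package names such as cuda-toolkit-12-5 and nvidia-gds-12-5.
cuda_pkg_suffix() {
    local major minor rest
    IFS=. read -r major minor rest <<< "$1"
    [ -n "$major" ] && [ -n "$minor" ] || return 1
    echo "${major}-${minor}"
}

# Usage (as root):
#   ver=12.5
#   dnf install "cuda-toolkit-$(cuda_pkg_suffix "$ver")" \
#               "nvidia-gds-$(cuda_pkg_suffix "$ver")"
```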

Once the installation completes successfully, the CUDA Toolkit is deployed at /usr/local/cuda.

$ ls /usr/local/cuda/

bin/ gds/ nsightee_plugins/ targets/

compute-sanitizer/ include@ nvml/ tools/

CUDA_Toolkit_Release_Notes.txt lib64@ nvvm/ version.json

DOCS libnvvp/ README

EULA.txt LICENSE share/

extras/ man/ src/

After the installation, ensure that the system recognizes the GPU devices correctly.

The nvidia-smi command shows information about the GPUs installed on your system, as follows.

$ nvidia-smi

Mon Jun 3 09:56:41 2024

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 555.42.02 Driver Version: 555.42.02 CUDA Version: 12.5 |

|-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA A100-PCIE-40GB Off | 00000000:41:00.0 Off | 0 |

| N/A 58C P0 66W / 250W | 1MiB / 40960MiB | 0% Default |

| | | Disabled |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+
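To check the compute capability requirement (6.0 or later) from the checklist, nvidia-smi can also emit CSV. This bash sketch assumes a driver recent enough to support the compute_cap query field; the helper `check_cc` is hypothetical.

```shell
# check_cc: read "index, name, compute_cap" CSV lines on stdin and report
# each GPU against the compute capability 6.0 minimum from the checklist.
# Exits non-zero if any GPU is below the minimum.
check_cc() {
    awk -F', *' '
        NF >= 3 {
            split($3, v, ".")
            if (v[1] + 0 >= 6) {
                print "GPU" $1 " " $2 ": CC " $3 " OK"
            } else {
                print "GPU" $1 " " $2 ": CC " $3 " below 6.0"
                bad = 1
            }
        }
        END { exit bad }'
}

# Usage:
#   nvidia-smi --query-gpu=index,name,compute_cap --format=csv,noheader | check_cc
```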

Tips

Additional configurations for systems with NVSwitch

For systems with multiple GPUs interconnected through NVSwitch, the nvidia-fabricmanager package must be installed.

If this package is not installed, cuInit(), which initializes CUDA, will fail with the CUDA_ERROR_SYSTEM_NOT_READY error, and PG-Strom will not start.

Run the following commands to install the nvidia-fabricmanager package.

(source)

# dnf install nvidia-fabricmanager

# systemctl enable nvidia-fabricmanager.service

# systemctl start nvidia-fabricmanager.service

Check GPUDirect Storage status

After installing the CUDA Toolkit according to the steps above, your system is ready for GPUDirect Storage.

Run the gdscheck tool to confirm the configuration for each storage device, as follows.

(In this example, the nvme-rdma and rpcrdma kernel modules are loaded in addition to nvme, so the related features are also reported as Supported.)

# /usr/local/cuda/gds/tools/gdscheck -p

GDS release version: 1.10.0.4

nvidia_fs version: 2.20 libcufile version: 2.12

Platform: x86_64

============

ENVIRONMENT:

============

=====================

DRIVER CONFIGURATION:

=====================

NVMe : Supported

NVMeOF : Supported

SCSI : Unsupported

ScaleFlux CSD : Unsupported

NVMesh : Unsupported

DDN EXAScaler : Unsupported

IBM Spectrum Scale : Unsupported

NFS : Supported

BeeGFS : Unsupported

WekaFS : Unsupported

Userspace RDMA : Unsupported

--Mellanox PeerDirect : Disabled

--rdma library : Not Loaded (libcufile_rdma.so)

--rdma devices : Not configured

--rdma_device_status : Up: 0 Down: 0

=====================

CUFILE CONFIGURATION:

=====================

properties.use_compat_mode : true

properties.force_compat_mode : false

properties.gds_rdma_write_support : true

properties.use_poll_mode : false

properties.poll_mode_max_size_kb : 4

properties.max_batch_io_size : 128

properties.max_batch_io_timeout_msecs : 5

properties.max_direct_io_size_kb : 16384

properties.max_device_cache_size_kb : 131072

properties.max_device_pinned_mem_size_kb : 33554432

properties.posix_pool_slab_size_kb : 4 1024 16384

properties.posix_pool_slab_count : 128 64 32

properties.rdma_peer_affinity_policy : RoundRobin

properties.rdma_dynamic_routing : 0

fs.generic.posix_unaligned_writes : false

fs.lustre.posix_gds_min_kb: 0

fs.beegfs.posix_gds_min_kb: 0

fs.weka.rdma_write_support: false

fs.gpfs.gds_write_support: false

profile.nvtx : false

profile.cufile_stats : 0

miscellaneous.api_check_aggressive : false

execution.max_io_threads : 4

execution.max_io_queue_depth : 128

execution.parallel_io : true

execution.min_io_threshold_size_kb : 8192

execution.max_request_parallelism : 4

properties.force_odirect_mode : false

properties.prefer_iouring : false

=========

GPU INFO:

=========

GPU index 0 NVIDIA A100-PCIE-40GB bar:1 bar size (MiB):65536 supports GDS, IOMMU State: Disabled

==============

PLATFORM INFO:

==============

IOMMU: disabled

Nvidia Driver Info Status: Supported(Nvidia Open Driver Installed)

Cuda Driver Version Installed: 12050

Platform: AS -2014CS-TR, Arch: x86_64(Linux 5.14.0-427.18.1.el9_4.x86_64)

Platform verification succeeded
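The gdscheck output is long, but the lines that matter for GPU-Direct SQL on local NVME-SSD can be checked mechanically. This bash sketch assumes the line formats shown above ("NVMe : Supported" and "Platform verification succeeded"); the helper `gds_ready` is hypothetical.

```shell
# gds_ready: read `gdscheck -p` output on stdin, print the NVMe and NVMeOF
# driver lines, and succeed only if NVMe is "Supported" and the platform
# verification line is present.
gds_ready() {
    awk '
        /^ *NVMe *:/ { nvme = ($0 ~ /: *Supported/) }
        /^ *NVMe(OF)? *:/ { print }
        /^Platform verification succeeded/ { verified = 1 }
        END { exit !(nvme && verified) }'
}

# Usage (as root):
#   /usr/local/cuda/gds/tools/gdscheck -p | gds_ready && echo "GDS ready for NVMe"
```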

Tips

Additional configuration for RAID volume

To read data from software RAID (md-raid0) volumes with GPUDirect Storage, the following line must be added to the /lib/udev/rules.d/63-md-raid-arrays.rules configuration file.

IMPORT{program}="/usr/sbin/mdadm --detail --export $devnode"

Then reboot the system to apply the new configuration. See the NVIDIA GPUDirect Storage Installation and Troubleshooting Guide for details.
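Before rebooting, you can confirm that the rule line is present. This bash sketch only checks for the line and does not edit the file, because its required position within the rules file is not stated here (see the NVIDIA guide). The helper `md_rule_present` is hypothetical.

```shell
# The exact line quoted above; single quotes keep $devnode literal.
MD_RULE='IMPORT{program}="/usr/sbin/mdadm --detail --export $devnode"'

# md_rule_present FILE: succeed if FILE contains MD_RULE as a fixed string.
md_rule_present() {
    grep -qF -- "$MD_RULE" "$1"
}

# Usage:
#   md_rule_present /lib/udev/rules.d/63-md-raid-arrays.rules || echo "rule missing"
```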

PCI Bar1 Memory Configuration

GPU-Direct SQL maps GPU device memory to the PCI BAR1 region (physical address space) of the host system, and sends P2P-RDMA requests to NVME devices with that region as the destination, which gives the shortest data transfer path.

To perform P2P-RDMA with sufficient concurrency, the GPU must have enough PCI BAR1 space to map the device buffers. The size of the PCI BAR1 area is fixed for most GPUs, and we recommend products whose PCI BAR1 size exceeds the GPU device memory size.

However, some GPU products allow the size of the PCI BAR1 area to be changed by switching the operation mode. If your GPU is one of the following, refer to the NVIDIA Display Mode Selector Tool and switch to the mode that maximizes the PCI BAR1 area size.

- NVIDIA L40S

- NVIDIA L40

- NVIDIA A40

- NVIDIA RTX 6000 Ada

- NVIDIA RTX A6000

- NVIDIA RTX A5500

- NVIDIA RTX A5000

To check the device memory size and PCI BAR1 size of the GPUs installed in the system, use the nvidia-smi -q command. The memory-related status is displayed as shown below.

$ nvidia-smi -q

:

FB Memory Usage

Total : 46068 MiB

Reserved : 685 MiB

Used : 4 MiB

Free : 45377 MiB

BAR1 Memory Usage

Total : 65536 MiB

Used : 1 MiB

Free : 65535 MiB

:
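The comparison recommended above, BAR1 total against device memory (FB) total, can be automated from nvidia-smi -q output. This bash/awk sketch assumes the section layout shown above, with a "Total :" line following each section header; the helper `bar1_vs_fb` is hypothetical.

```shell
# bar1_vs_fb: read `nvidia-smi -q` output on stdin and, per GPU, print the
# FB and BAR1 totals in MiB. Exits non-zero if any GPU has a BAR1 area that
# is not larger than its device memory.
bar1_vs_fb() {
    awk '
        /^ *FB Memory Usage/   { sect = "fb";   next }
        /^ *BAR1 Memory Usage/ { sect = "bar1"; next }
        /^ *Total *:/ && sect != "" {
            v = $0
            sub(/^[^:]*: */, "", v)   # drop the label, keep e.g. 46068 MiB
            v = v + 0                 # numeric prefix only
            if (sect == "fb") {
                fb = v
            } else {
                ok = (v > fb)
                printf "FB %d MiB, BAR1 %d MiB: %s\n", fb, v, (ok ? "OK" : "BAR1 not larger than device memory")
                if (!ok) small = 1
            }
            sect = ""
        }
        END { exit small }'
}

# Usage: nvidia-smi -q | bar1_vs_fb
```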

HeteroDB extra modules

The heterodb-extra module adds the following features to PG-Strom.

- multi-GPUs support

- GPUDirect SQL

- GiST index support on GPU

- License management

If you use only the open source modules and do not need the above features, you do not need to install the heterodb-extra module.

Please skip this section.

Install the heterodb-extra package, downloaded from the SWDC, as follows.

# dnf install heterodb-extra

License activation

License activation is needed to use all the features of heterodb-extra, provided by HeteroDB, Inc. You can operate the system without a license, but the features below are restricted.

- Multiple GPUs support

- Striping of NVME-SSD drives (md-raid0) on GPUDirect SQL

- Support of NVME-oF device on GPUDirect SQL

- Support of GiST index on GPU-version of PostGIS workloads

You can obtain a license file, a plain text file like the one below, from HeteroDB, Inc.

IAgIVdKxhe+BSer3Y67jQW0+uTzYh00K6WOSH7xQ26Qcw8aeUNYqJB9YcKJTJb+QQhjmUeQpUnboNxVwLCd3HFuLXeBWMKp11/BgG0FSrkUWu/ZCtDtw0F1hEIUY7m767zAGV8y+i7BuNXGJFvRlAkxdVO3/K47ocIgoVkuzBfLvN/h9LffOydUnHPzrFHfLc0r3nNNgtyTrfvoZiXegkGM9GBTAKyq8uWu/OGonh9ybzVKOgofhDLk0rVbLohOXDhMlwDl2oMGIr83tIpCWG+BGE+TDwsJ4n71Sv6n4bi/ZBXBS498qShNHDGrbz6cNcDVBa+EuZc6HzZoF6UrljEcl=

----

VERSION:2

SERIAL_NR:HDB-TRIAL

ISSUED_AT:2019-05-09

EXPIRED_AT:2019-06-08

GPU_UUID:GPU-a137b1df-53c9-197f-2801-f2dccaf9d42f

Copy the license file to /etc/heterodb.license, then restart PostgreSQL.

PostgreSQL's startup log messages include the license information, which confirms that the license has been activated successfully.

:

LOG: HeteroDB Extra module loaded [api_version=20231105,cufile=on,nvme_strom=off,githash=9ca2fe4d2fbb795ad2d741dcfcb9f2fe499a5bdf]

LOG: HeteroDB License: { "version" : 2, "serial_nr" : "HDB-TRIAL", "issued_at" : "2022-11-19", "expired_at" : "2099-12-31", "nr_gpus" : 1, "gpus" : [ { "uuid" : "GPU-13943bfd-5b30-38f5-0473-78979c134606" } ]}

LOG: PG-Strom version 5.0.1 built for PostgreSQL 15 (githash: 972441dbafed6679af86af40bc8613be2d73c4fd)

:
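The license file fields can also be checked from the shell. This bash sketch assumes the KEY:VALUE layout and ISO-formatted (YYYY-MM-DD) dates of the sample license above; the helpers `license_field` and `license_expired` are hypothetical.

```shell
# license_field FILE KEY: print the value of a KEY:VALUE line
# (e.g. SERIAL_NR, EXPIRED_AT) from the license file.
license_field() {
    awk -F: -v key="$2" '$1 == key { sub(/^[^:]*:/, ""); print; exit }' "$1"
}

# license_expired FILE [TODAY]: succeed if EXPIRED_AT is before TODAY
# (default: the current date). ISO dates compare correctly as strings.
# Returns 2 if no EXPIRED_AT line is found.
license_expired() {
    local exp today
    exp=$(license_field "$1" EXPIRED_AT)
    [ -n "$exp" ] || return 2
    today=${2:-$(date +%F)}
    [[ "$exp" < "$today" ]]
}

# Usage:
#   license_field /etc/heterodb.license EXPIRED_AT
#   license_expired /etc/heterodb.license && echo "license has expired"
#   journalctl -u postgresql-16 | grep 'HeteroDB License'
```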

PostgreSQL Installation

This section introduces PostgreSQL installation with RPM.

We do not describe installation from source, because many documents already cover that approach and the ./configure script has many options.

PostgreSQL is also distributed as part of Linux distributions; however, those packages are not the latest and are often older than the versions PG-Strom supports. For example, Red Hat Enterprise Linux 7.x distributes the PostgreSQL v9.2.x series, which has already reached EOL in the PostgreSQL community.

The PostgreSQL Global Development Group provides a yum repository that distributes the latest PostgreSQL and related packages. As with EPEL, you install a small package that sets up the yum repository, then install PostgreSQL and related software.

The list of yum repository definitions is available here: http://yum.postgresql.org/repopackages.php.

Repository definitions are per PostgreSQL major version and Linux distribution. You need to choose the one for your Linux distribution, and for PostgreSQL v15 or later.

You can install PostgreSQL with the following steps:

- Install the yum repository definition.

- Disable the distribution's default PostgreSQL module.

- Install the PostgreSQL packages.

# dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-9-x86_64/pgdg-redhat-repo-latest.noarch.rpm

# dnf -y module disable postgresql

# dnf install -y postgresql16-devel postgresql16-server

Note

On Red Hat Enterprise Linux, the postgresql package name conflicts with the distribution's default module, so the packages from PGDG cannot be installed as-is. Therefore, disable the distribution's postgresql module with dnf -y module disable postgresql.
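The repository RPM URL and the package names above depend on the EL major version and the PostgreSQL major version. This bash sketch assumes that the EL-8 URL follows the same pattern as the EL-9 URL shown, and that package names follow postgresql<major>-devel / postgresql<major>-server; the helpers `pgdg_repo_url` and `pgdg_packages` are hypothetical.

```shell
# pgdg_repo_url EL_MAJOR: repository RPM URL, patterned on the EL-9 URL above.
pgdg_repo_url() {
    printf 'https://download.postgresql.org/pub/repos/yum/reporpms/EL-%s-x86_64/pgdg-redhat-repo-latest.noarch.rpm\n' "$1"
}

# pgdg_packages PG_MAJOR: development and server packages for that version.
pgdg_packages() {
    printf 'postgresql%s-devel postgresql%s-server\n' "$1" "$1"
}

# Usage (as root):
#   el=$(. /etc/os-release; echo "${VERSION_ID%%.*}")
#   dnf install -y "$(pgdg_repo_url "$el")"
#   dnf -y module disable postgresql
#   dnf install -y $(pgdg_packages 16)   # unquoted on purpose: two names
```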

PG-Strom Installation

RPM Installation

PG-Strom and related packages are distributed from the HeteroDB Software Distribution Center. If the repository definition has already been added, only a few steps are needed.

We provide separate RPM packages of PG-Strom for each PostgreSQL major version: the pg_strom-PG15 package is built for PostgreSQL v15, and pg_strom-PG16 for PostgreSQL v16.

This is due to the binary compatibility restrictions of PostgreSQL extension modules.

# dnf install -y pg_strom-PG16

That's all for package installation.
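Because the package name must match the PostgreSQL major version, it can be derived from `pg_config --version`. A bash sketch; the helper `strom_pkg` is hypothetical, and it assumes the version is the second word of that output (e.g. "PostgreSQL 16.3").

```shell
# strom_pkg "PG_CONFIG_VERSION_OUTPUT": derive pg_strom-PG15, pg_strom-PG16,
# ... from the output of `pg_config --version`, e.g. "PostgreSQL 16.3".
strom_pkg() {
    local ver major
    ver=$(awk '{ print $2 }' <<< "$1")
    major=${ver%%.*}
    case "$major" in
        ''|*[!0-9]*) return 1 ;;   # not a plain numeric major version
    esac
    echo "pg_strom-PG${major}"
}

# Usage (as root):
#   dnf install -y "$(strom_pkg "$(/usr/pgsql-16/bin/pg_config --version)")"
```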

Installation from the source

For developers, we also describe the steps to build and install PG-Strom from the source code.

Getting the source code

As with the RPM packages, you can download a tarball of the source code from the HeteroDB Software Distribution Center. However, tarballs are released with some time lag, so you may prefer to check out the master branch of PG-Strom on GitHub to use the latest development version.

$ git clone https://github.com/heterodb/pg-strom.git

Cloning into 'pg-strom'...

remote: Counting objects: 13797, done.

remote: Compressing objects: 100% (215/215), done.

remote: Total 13797 (delta 208), reused 339 (delta 167), pack-reused 13400

Receiving objects: 100% (13797/13797), 11.81 MiB | 1.76 MiB/s, done.

Resolving deltas: 100% (10504/10504), done.

Building the PG-Strom

The configuration used to build PG-Strom must strictly match that of the target PostgreSQL. For example, if a particular struct has an inconsistent layout because of different build configurations, it may lead to subtle bugs that are hard to track down.

Therefore, to avoid such inconsistency, PG-Strom does not have its own configure script, but references the build configuration of PostgreSQL via the pg_config command.

If the PATH environment variable includes the pg_config command of the target PostgreSQL, just run make and make install.

Otherwise, pass the PG_CONFIG=... parameter to the make command to specify the full path of the pg_config command.

$ cd pg-strom/src

$ make PG_CONFIG=/usr/pgsql-16/bin/pg_config

$ sudo make install PG_CONFIG=/usr/pgsql-16/bin/pg_config
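After make install, you can confirm that the module landed where the target PostgreSQL looks for it: the directory reported by `pg_config --pkglibdir`, which is what $libdir refers to. This bash sketch assumes the module file is named pg_strom.so; the helper `strom_installed` is hypothetical.

```shell
# strom_installed PG_CONFIG: succeed if pg_strom.so exists in the library
# directory reported by the given pg_config.
strom_installed() {
    local libdir
    libdir=$("$1" --pkglibdir) || return 1
    [ -f "$libdir/pg_strom.so" ]
}

# Usage:
#   strom_installed /usr/pgsql-16/bin/pg_config && echo "pg_strom.so installed"
```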

Post Installation Setup

Creation of database cluster

Since the database cluster has not been created yet, run the initdb command to set up the initial database of PostgreSQL.

The default path of the database cluster on RPM installation is /var/lib/pgsql/<version number>/data.

If you install the postgresql-alternatives package, this default path can be referenced as /var/lib/pgdata regardless of the PostgreSQL version.

# su - postgres

$ /usr/pgsql-16/bin/initdb -D /var/lib/pgdata/

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with locale "en_US.UTF-8".

The default database encoding has accordingly been set to "UTF8".

The default text search configuration will be set to "english".

Data page checksums are disabled.

fixing permissions on existing directory /var/lib/pgdata ... ok

creating subdirectories ... ok

selecting dynamic shared memory implementation ... posix

selecting default max_connections ... 100

selecting default shared_buffers ... 128MB

selecting default time zone ... Asia/Tokyo

creating configuration files ... ok

running bootstrap script ... ok

performing post-bootstrap initialization ... ok

syncing data to disk ... ok

initdb: warning: enabling "trust" authentication for local connections

You can change this by editing pg_hba.conf or using the option -A, or

--auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

pg_ctl -D /var/lib/pgdata/ -l logfile start

Setup postgresql.conf

Next, edit postgresql.conf, the configuration file of PostgreSQL.

At least the parameters below must be edited for PG-Strom to work.

Tune other parameters according to the usage of the system and the expected workloads.

- shared_preload_libraries

    - The PG-Strom module must be loaded through shared_preload_libraries when the postmaster process starts; it cannot be loaded on demand. Therefore, you must add the configuration below.

      shared_preload_libraries = '$libdir/pg_strom'

- max_worker_processes

    - PG-Strom internally uses several background workers, so the default configuration (= 8) leaves too few workers for other purposes. We recommend expanding the variable with a reasonable margin.

      max_worker_processes = 100

- shared_buffers

    - Although it depends on the workloads, the initial configuration of shared_buffers is too small for the data sizes PG-Strom is expected to process, so storage I/O restricts the overall performance and the GPU may not be utilized efficiently.

    - So, we recommend expanding the variable with a reasonable margin.

      shared_buffers = 10GB

    - Please consider applying SSD-to-GPU Direct SQL Execution to process data larger than the system's physical RAM.

- work_mem

    - Although it depends on the workloads, the initial configuration of work_mem is too small to choose the optimal query execution plan for analytic queries.

    - A typical example is that a disk-based merge sort may be chosen instead of an in-memory quick sort.

    - So, we recommend expanding the variable with a reasonable margin.

      work_mem = 1GB
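The settings listed above can be applied to postgresql.conf with a small helper. This bash sketch (GNU sed, as on RHEL and Ubuntu) replaces the first existing line for a parameter, commented out or not, and otherwise appends one. The helper `set_guc` is hypothetical and assumes plain parameter names and values without "|" or "&".

```shell
# set_guc FILE NAME VALUE: set "NAME = VALUE" in postgresql.conf.
# Replaces the first line that sets NAME (optionally commented out with #);
# appends a new line if there is none. Requires GNU sed (0,/re/ address).
set_guc() {
    local file=$1 name=$2 value=$3
    local pat="^[[:space:]]*#?[[:space:]]*${name}[[:space:]]*="
    if grep -qE "$pat" "$file"; then
        sed -i -E "0,/${pat}/s|${pat}.*|${name} = ${value}|" "$file"
    else
        printf '%s = %s\n' "$name" "$value" >> "$file"
    fi
}

# Usage (as the postgres user, with the cluster created by initdb above):
#   conf=/var/lib/pgdata/postgresql.conf
#   set_guc "$conf" shared_preload_libraries "'\$libdir/pg_strom'"
#   set_guc "$conf" max_worker_processes 100
#   set_guc "$conf" shared_buffers 10GB
#   set_guc "$conf" work_mem 1GB
```

The leading "#?" in the pattern lets it match the commented-out defaults that postgresql.conf ships with, while names such as maintenance_work_mem are left alone because the parameter name must directly follow the optional "#".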

Expand OS resource limits

GPU Direct SQL in particular tries to open many files simultaneously, so the resource limit on the number of file descriptors per process should be raised.

We also recommend not limiting the core file size, so that PostgreSQL reliably generates a core dump if it crashes.

If the PostgreSQL service is launched by systemd, you can put the resource limit configuration at /etc/systemd/system/postgresql-XX.service.d/pg_strom.conf.

The RPM installation sets up the configuration below by default.

It includes a commented-out setting of the environment variable CUDA_ENABLE_COREDUMP_ON_EXCEPTION. This is a developer option that, if enabled, generates a GPU core dump on any CUDA/GPU level error. See CUDA-GDB: GPU core dump support for more details.

[Service]

LimitNOFILE=65536

LimitCORE=infinity

#Environment=CUDA_ENABLE_COREDUMP_ON_EXCEPTION=1
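For installations from source, where no RPM sets this up, the same drop-in can be written by a small bash sketch. The helper `write_pgstrom_dropin` is hypothetical; the file content is exactly the configuration shown above.

```shell
# write_pgstrom_dropin DIR: write the resource-limit drop-in shown above
# to DIR/pg_strom.conf, creating DIR if needed.
write_pgstrom_dropin() {
    mkdir -p "$1" &&
    cat > "$1/pg_strom.conf" <<'EOF'
[Service]
LimitNOFILE=65536
LimitCORE=infinity
#Environment=CUDA_ENABLE_COREDUMP_ON_EXCEPTION=1
EOF
}

# Usage (as root):
#   write_pgstrom_dropin /etc/systemd/system/postgresql-16.service.d
#   systemctl daemon-reload
#   systemctl show postgresql-16 -p LimitNOFILE -p LimitCORE
```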

Start PostgreSQL

Start the PostgreSQL service.

If PG-Strom is set up appropriately, it writes log messages showing the GPU devices it recognized. In the example below, PG-Strom recognized one NVIDIA A100 (PCIe; 40GB) and displays the closest GPU for each NVME-SSD drive.

# systemctl start postgresql-16

# journalctl -u postgresql-16

Jun 02 17:28:45 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:45.989 JST [20242] LOG: HeteroDB Extra module loaded [api_version=20240418,cufile=off,nvme_strom=off,githash=3ffc65428c07bb3c9d0e5c75a2973389f91dfcd4]

Jun 02 17:28:45 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:45.989 JST [20242] LOG: HeteroDB License: { "version" : 2, "serial_nr" : "HDB-TRIAL", "issued_at" : "2024-06-02", "expired_at" : "2099-12-31", "nr_gpus" : 1, "gpus" : [ { "uuid" : "GPU-13943bfd-5b30-38f5-0473-78979c134606" } ]}

Jun 02 17:28:45 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:45.989 JST [20242] LOG: PG-Strom version 5.12.el9 built for PostgreSQL 16 (githash: )

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.114 JST [20242] LOG: PG-Strom binary built for CUDA 12.4 (CUDA runtime 12.5)

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.114 JST [20242] WARNING: The CUDA version where this PG-Strom module binary was built for (12.4) is newer than the CUDA runtime version on this platform (12.5). It may lead unexpected behavior, and upgrade of CUDA toolkit is recommended.

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.114 JST [20242] LOG: PG-Strom: GPU0 NVIDIA A100-PCIE-40GB (108 SMs; 1410MHz, L2 40960kB), RAM 39.50GB (5120bits, 1.16GHz), PCI-E Bar1 64GB, CC 8.0

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:41:00:0] GPU0 (NVIDIA A100-PCIE-40GB; GPU-13943bfd-5b30-38f5-0473-78979c134606)

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:81:00:0] nvme6 (NGD-IN2500-080T4-C) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:82:00:0] nvme3 (INTEL SSDPF2KX038TZ) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:c2:00:0] nvme1 (INTEL SSDPF2KX038TZ) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:c6:00:0] nvme4 (Corsair MP600 CORE) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:c3:00:0] nvme5 (INTEL SSDPF2KX038TZ) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:c1:00:0] nvme0 (INTEL SSDPF2KX038TZ) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.117 JST [20242] LOG: [0000:c4:00:0] nvme2 (NGD-IN2500-080T4-C) --> GPU0 [dist=9]

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.217 JST [20242] LOG: redirecting log output to logging collector process

Jun 02 17:28:48 buri.heterodb.com postgres[20242]: 2024-06-02 17:28:48.217 JST [20242] HINT: Future log output will appear in directory "log".

Jun 02 17:28:48 buri.heterodb.com systemd[1]: Started PostgreSQL 16 database server.
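With many NVME-SSD drives, the startup log can be summarized rather than read line by line. This bash/awk sketch assumes the message formats in the example above ("PG-Strom: GPUn ..." and "... --> GPUn [dist=...]"); the helper `summarize_strom_log` is hypothetical.

```shell
# summarize_strom_log: read PostgreSQL startup log lines on stdin and print
# the GPUs PG-Strom detected, any WARNING messages, and how many NVME-SSD
# drives were mapped to each GPU.
summarize_strom_log() {
    awk '
        /LOG: +PG-Strom: GPU[0-9]+ / {
            sub(/.*PG-Strom: /, "")
            print "detected: " $0
            next
        }
        / --> GPU[0-9]+/ {
            g = $0
            sub(/.* --> /, "", g)    # e.g. GPU0 [dist=9]
            sub(/ .*/, "", g)        # e.g. GPU0
            nvme[g]++
            next
        }
        /WARNING:/ { sub(/.*WARNING: +/, ""); print "warning: " $0 }
        END { for (g in nvme) print g ": " nvme[g] " NVME-SSD(s)" }'
}

# Usage: journalctl -u postgresql-16 | summarize_strom_log
```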

Creation of PG-Strom Extension

Finally, create the database objects related to PG-Strom, such as SQL functions.

These objects are packaged using the EXTENSION feature of PostgreSQL, so all you need to run is CREATE EXTENSION on the SQL command line.

Please note that this step is needed for each new database.

If you want PG-Strom to be pre-configured on newly created databases, create the PG-Strom extension in the template1 database; it is then copied to new databases by the CREATE DATABASE command.

$ psql -U postgres

psql (16.3)

Type "help" for help.

postgres=# CREATE EXTENSION pg_strom ;

CREATE EXTENSION

That's all for the installation.
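To create the extension in template1 and in existing databases in one pass, a loop over database names is enough. This bash sketch uses IF NOT EXISTS so that re-runs are harmless; the helper `create_strom_ext` and the PSQL override variable are hypothetical.

```shell
# create_strom_ext DB...: run CREATE EXTENSION for PG-Strom in each named
# database as the postgres user. PSQL may point to a specific psql binary.
create_strom_ext() {
    local db
    for db in "$@"; do
        "${PSQL:-psql}" -U postgres -d "$db" -v ON_ERROR_STOP=1 \
            -c 'CREATE EXTENSION IF NOT EXISTS pg_strom' || return 1
    done
}

# Usage: create_strom_ext template1 postgres
```

Note that template1 only affects databases created afterwards, so existing databases still need to be listed explicitly.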

PostGIS Installation

PG-Strom supports execution of some PostGIS functions on GPU devices. This section describes the steps to install the PostGIS module. Skip it if you do not need it.

The PostGIS module can be installed from the yum repository of the PostgreSQL Global Development Group, like PostgreSQL itself. The example below shows the command to install PostGIS v3.4 built for PostgreSQL v16.

# dnf install postgis34_16

Start the PostgreSQL server after the initial setup of the database cluster, then run the CREATE EXTENSION command from an SQL client to define the geometry data type and SQL functions for geoanalytics.

postgres=# CREATE EXTENSION postgis;

CREATE EXTENSION
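The package name postgis34_16 combines the PostGIS and PostgreSQL versions. This bash sketch generalizes that single example into a naming pattern, which is an assumption; check the actual name in the PGDG repository. The helper `postgis_pkg` is hypothetical.

```shell
# postgis_pkg POSTGIS_VERSION PG_MAJOR: compose a name in the form of the
# example above (postgis34_16 = PostGIS 3.4 for PostgreSQL 16).
postgis_pkg() {
    local major minor
    IFS=. read -r major minor _ <<< "$1"
    [ -n "$major" ] && [ -n "$minor" ] && [ -n "$2" ] || return 1
    echo "postgis${major}${minor}_$2"
}

# Usage (as root, then as a database user):
#   dnf install "$(postgis_pkg 3.4 16)"
#   psql -U postgres -c 'CREATE EXTENSION IF NOT EXISTS postgis'
#   psql -U postgres -c 'SELECT postgis_full_version()'
```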

Installation on Ubuntu Linux

Although PG-Strom packages are not available for Ubuntu Linux right now, you can build and run PG-Strom from the source code.

After installing Ubuntu Linux, install the Ubuntu Linux versions of the MOFED driver, the CUDA Toolkit, and PostgreSQL.

Next, install the heterodb-extra package.

A .deb package for Ubuntu Linux is provided, so please obtain the latest version from the SWDC.

$ wget https://heterodb.github.io/swdc/deb/heterodb-extra_5.4-1_amd64.deb

$ sudo dpkg -i heterodb-extra_5.4-1_amd64.deb

Check out the source code of PG-Strom, then build and install it as follows.

At this time, do not forget to specify the target PostgreSQL with pg_config.

Post-installation configuration is almost the same as for Red Hat Enterprise Linux or Rocky Linux.

$ git clone https://github.com/heterodb/pg-strom.git

$ cd pg-strom/src

$ make PG_CONFIG=/path/to/pgsql/bin/pg_config -j 8

$ sudo make PG_CONFIG=/path/to/pgsql/bin/pg_config install

However, if you use a packaged PostgreSQL and start it with the systemctl command, the PATH environment variable will be cleared (probably for security reasons).

As a result, the script launched to build the GPU binary on the first startup will not work properly.

To avoid this on Ubuntu Linux, add the following line to /etc/postgresql/PGVERSION/main/environment.

/etc/postgresql/PGVERSION/main/environment:

PATH='/usr/local/cuda/bin:/usr/bin:/bin'
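To make this edit repeatable, a small bash sketch can replace an existing PATH= line or append one. The helper `set_cuda_path` is hypothetical; the line it writes is exactly the one shown above.

```shell
# set_cuda_path ENV_FILE: ensure the cluster's environment file contains the
# PATH line shown above, replacing any existing PATH= line.
set_cuda_path() {
    local line="PATH='/usr/local/cuda/bin:/usr/bin:/bin'"
    if grep -q '^PATH=' "$1"; then
        sed -i "s|^PATH=.*|$line|" "$1"
    else
        printf '%s\n' "$line" >> "$1"
    fi
}

# Usage (as root, PostgreSQL 16 with the default "main" cluster):
#   set_cuda_path /etc/postgresql/16/main/environment
#   systemctl restart postgresql@16-main
```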